IIS

Internet Information Services (IIS) is a Microsoft web server that runs on the Windows operating system and serves static and dynamic web content to internet users. IIS can be used to host, deploy, and manage web applications built with technologies such as ASP.NET and PHP.

John Stephen Jacob Nallamu

20 Nov 2025

Installation and Initial setup for IIS

1. IIS Installation

Enable the IIS feature from an elevated (Run as administrator) PowerShell prompt, then restart the Windows machine:

dism.exe /online /enable-feature /featurename:IIS-WebServer /all

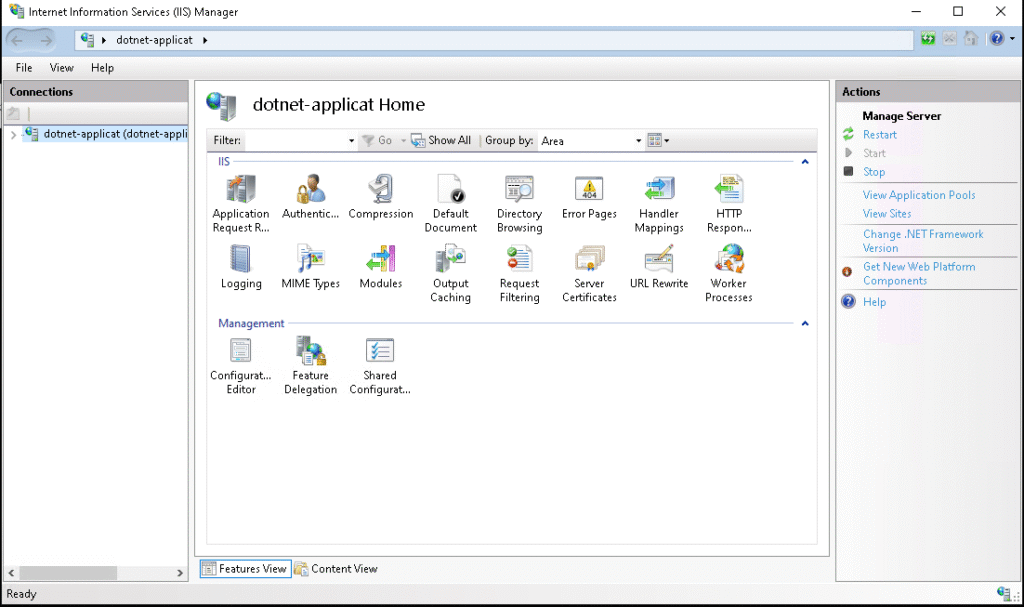

After the restart, IIS Manager looks something like this.
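To confirm from a script that IIS is answering requests after the restart, a small check like the one below can help. It is only a sketch, not part of the original setup. It assumes Python 3 is available on the server and that the Default Web Site is still bound to port 80. The helper name `http_status` is hypothetical.

```python
# Sketch: check whether a web server answers on a URL (assumption: IIS's
# Default Web Site is still bound to port 80 on this machine).
from typing import Optional
import urllib.error
import urllib.request

# Bypass any system proxy so the request goes straight to the local server.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def http_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """Return the HTTP status code for GET `url`, or None if nothing answered."""
    try:
        with _OPENER.open(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        return err.code  # the server answered, but with an error status (404, 500, ...)
    except OSError:
        return None  # connection refused, timeout, DNS failure, ...


# Example: http_status("http://localhost/") should return 200 once IIS is up.
```

A `None` result means nothing is listening at all. A 4xx or 5xx code means a server answered but the request failed.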

2. Install Modules for IIS

a. Install Application Request Routing

b. Install URL Rewrite

https://learn.microsoft.com/en-us/iis/extensions/url-rewrite-module/using-url-rewrite-module-20

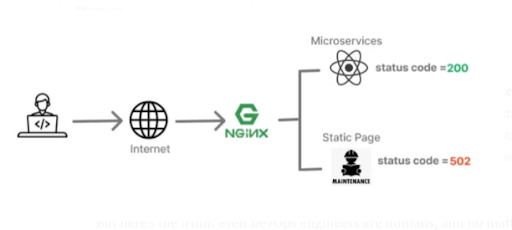

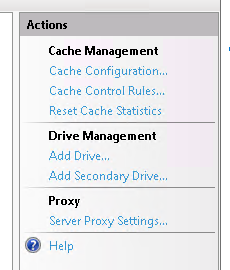

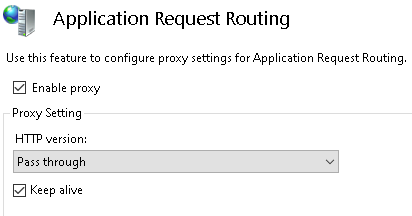

3. Configure the Reverse Proxy

a. Select the server node in the Connections tab and double-click Application Request Routing Cache

b. Click Server Proxy Settings in the Actions tab

c. Check the Enable proxy tick box

d. Apply the changes in the Actions tab
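The Enable proxy setting is stored at server level in applicationHost.config, as a `<proxy enabled="true" />` element inside `<system.webServer>`. The sketch below reads that file and reports whether the flag is on. It assumes the default config location and an account with administrator rights, which are needed to read the file. `proxy_enabled` and `proxy_enabled_on_this_server` are hypothetical helper names.

```python
# Sketch: report whether ARR's server-level proxy is enabled.
# Assumption: default IIS install path; reading it needs administrator rights.
import xml.etree.ElementTree as ET

APPHOST_CONFIG = r"C:\Windows\System32\inetsrv\config\applicationHost.config"


def proxy_enabled(root: ET.Element) -> bool:
    """True if <configuration>/<system.webServer>/<proxy enabled="true"> is present."""
    proxy = root.find("./system.webServer/proxy")
    return proxy is not None and proxy.get("enabled", "false").lower() == "true"


def proxy_enabled_on_this_server(path: str = APPHOST_CONFIG) -> bool:
    # ET.parse reads the file as bytes, so a BOM or XML declaration is handled.
    return proxy_enabled(ET.parse(path).getroot())
```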

Use the Reverse Proxy method to host the Node.js Application in IIS

1. Add a new site

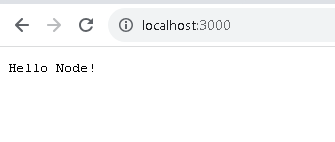

a. First, run the web application locally or on a server, and note the port it listens on

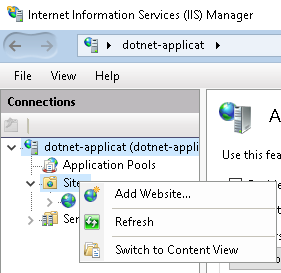

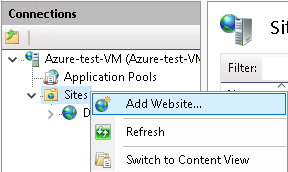

b. Open IIS Manager, right-click Sites in the Connections tab, and click Add Website

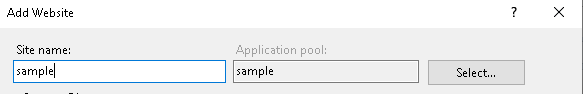

c. Enter a name for the new site

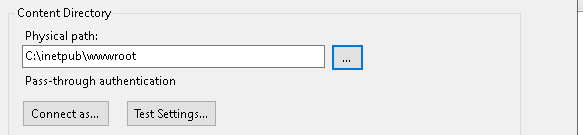

d. Set the physical path to any temporary folder

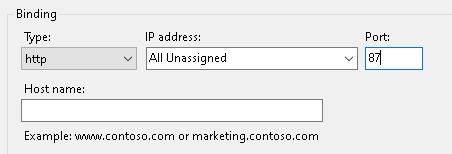

e. Set a port number that no other site or application uses, then click OK
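Before wiring up the reverse proxy, it can help to confirm two things from the steps above. First, the Node.js app from step a is actually listening. Second, the port chosen in step e is not already in use. The Python sketch below does both with plain sockets. The helper names and the example ports (3000 and 8081) are hypothetical. If your app listens only on IPv6, pass `host="::1"`. A successful bind is only a quick heuristic; IIS Manager also warns when two sites share a binding.

```python
# Sketch: two quick socket checks for this step (assumptions: the Node.js app
# listens on 127.0.0.1; 3000 and 8081 below are hypothetical port numbers).
import socket


def is_listening(port: int, host: str = "127.0.0.1", timeout: float = 2.0) -> bool:
    """True if something accepts TCP connections on host:port (e.g. your Node.js app)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def is_port_free(port: int, host: str = "0.0.0.0") -> bool:
    """True if this process could bind host:port, i.e. nothing else holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return False
    return True


# Example: is_listening(3000) and is_port_free(8081)
```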

2. Add the Node.js Application

a. Right-click the site created in the previous step and click Add Application

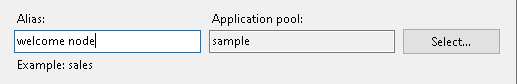

b. Enter a new name for your application

c. Browse to your application's actual physical path and click OK

3. Add the Node.js localhost URL to an IIS URL Rewrite rule

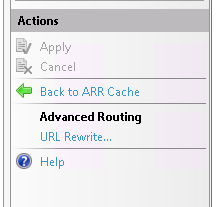

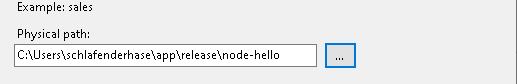

a. Select the site you just created and double-click URL Rewrite

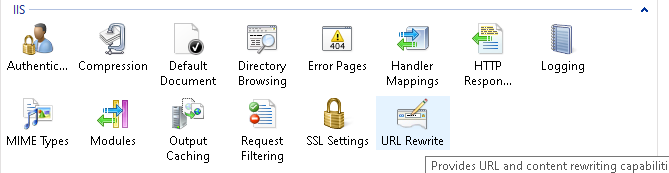

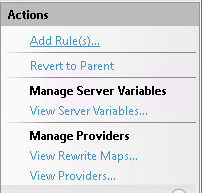

b. Click Add Rule(s) in the Actions tab

c. Double-click the Reverse Proxy template

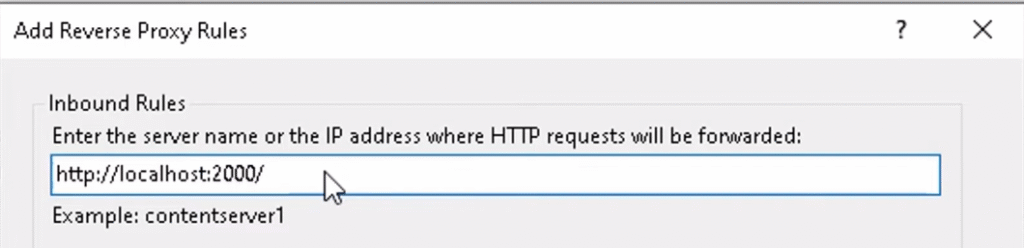

d. Enter the URL of your application and click OK

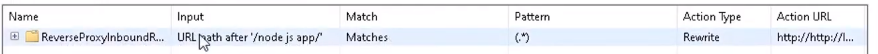

e. After the rule is created, double-click it in the URL Rewrite rule list to open it

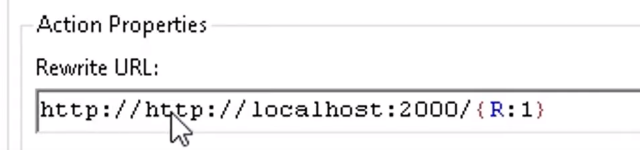

f. The generated rule has a known issue: its rewrite URL may contain a duplicated “http://” prefix. Remove the extra “http://” so that the URL starts with http://localhost:<port number>

g. Apply the changes. Your Node.js application is now served through IIS.
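The Reverse Proxy template typically saves its rule in the site's web.config, under `<system.webServer><rewrite><rules>`. To illustrate what the finished rule looks like, the Python sketch below builds an equivalent web.config. It also strips a duplicated `http://` prefix, which is the fix described in step f. The rule name `ReverseProxyInboundRule1`, the port 3000, and the helper names are assumptions. IIS Manager may write extra attributes, so treat this as an illustration rather than the exact file it produces.

```python
# Sketch of a web.config with one reverse-proxy inbound rule, similar to what
# the URL Rewrite "Reverse Proxy" template creates. Assumptions: the rule name
# and port are illustrative, and IIS Manager may write extra attributes.
import re
import xml.etree.ElementTree as ET

# Matches one or more leading schemes; group 1 is the last one,
# e.g. "http://http://localhost:3000" -> ("http://", "localhost:3000").
_SCHEMES = re.compile(r"^(?:https?://)*(https?://)(.*)$", re.IGNORECASE)


def normalize_backend_url(url: str) -> str:
    """Collapse a duplicated scheme and drop a trailing slash."""
    url = url.strip().rstrip("/")
    match = _SCHEMES.match(url)
    if match is None:
        return "http://" + url  # typed without a scheme, e.g. "localhost:3000"
    scheme, address = match.groups()
    return scheme.lower() + address


def build_reverse_proxy_config(
    backend_url: str, rule_name: str = "ReverseProxyInboundRule1"
) -> str:
    """Return web.config XML that rewrites every request path to the backend."""
    configuration = ET.Element("configuration")
    web_server = ET.SubElement(configuration, "system.webServer")
    rules = ET.SubElement(ET.SubElement(web_server, "rewrite"), "rules")
    rule = ET.SubElement(rules, "rule", name=rule_name, stopProcessing="true")
    ET.SubElement(rule, "match", url="(.*)")  # capture the whole request path
    # {R:1} is the back-reference to that capture, so /api/x -> <backend>/api/x.
    target = normalize_backend_url(backend_url) + "/{R:1}"
    ET.SubElement(rule, "action", type="Rewrite", url=target)
    return ET.tostring(configuration, encoding="unicode")
```

For example, `build_reverse_proxy_config("http://http://localhost:3000")` produces a rule whose action URL is `http://localhost:3000/{R:1}`. The `{R:1}` back-reference carries the original request path through to the Node.js app.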

Use the Normal Method to host a .NET Core Application on IIS

Tutorial video: Deploy and Host an ASP.NET Core Application on IIS

https://youtu.be/Q_A_t7KS5Ss?si=QczaDqbpIRnMhdfi

1. Install the .NET Hosting Bundle

Download the .NET hosting bundle and run it.

https://dotnet.microsoft.com/en-us/download/dotnet/thank-you/runtime-aspnetcore-8.0.0-windows-hosting-bundle-installer
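After the installer finishes, `dotnet --list-runtimes` offers a quick way to see which ASP.NET Core runtimes are present. It prints one line per runtime in the form `Name Version [Path]`. The sketch below parses that output. It assumes the `dotnet` CLI is on PATH, and the function names are hypothetical. A `Microsoft.AspNetCore.App` entry only shows that the runtime is installed; it does not by itself prove that the Hosting Bundle registered the ASP.NET Core Module in IIS.

```python
# Sketch: list installed ASP.NET Core runtime versions via the dotnet CLI.
# Assumption: `dotnet` is on PATH; this does not inspect IIS module registration.
import re
import shutil
import subprocess
from typing import List

# Each line looks like:
# Microsoft.AspNetCore.App 8.0.0 [C:\Program Files\dotnet\shared\Microsoft.AspNetCore.App]
_RUNTIME_LINE = re.compile(r"^(?P<name>\S+)\s+(?P<version>\S+)\s+\[(?P<path>.+)\]$")


def aspnetcore_versions(list_runtimes_output: str) -> List[str]:
    """Extract Microsoft.AspNetCore.App versions from `dotnet --list-runtimes` output."""
    versions = []
    for line in list_runtimes_output.splitlines():
        match = _RUNTIME_LINE.match(line.strip())
        if match and match.group("name") == "Microsoft.AspNetCore.App":
            versions.append(match.group("version"))
    return versions


def installed_aspnetcore_versions() -> List[str]:
    """Run `dotnet --list-runtimes`; return [] if the CLI is not found."""
    dotnet = shutil.which("dotnet")
    if dotnet is None:
        return []
    result = subprocess.run(
        [dotnet, "--list-runtimes"], capture_output=True, text=True, check=False
    )
    return aspnetcore_versions(result.stdout)


# Example: any(v.startswith("8.") for v in installed_aspnetcore_versions())
```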

2. Add a New Website

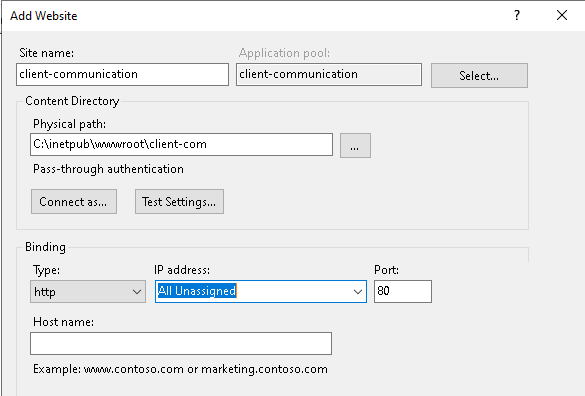

a. Right-click Sites in the Connections tab and click Add Website

b. Enter any site name and select a new physical path for the application. If you have a domain name, enter it in the Host name field.

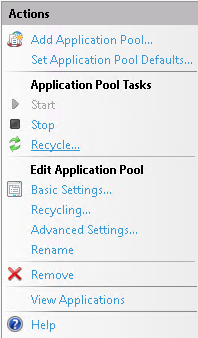

3. Configure the Application Pool

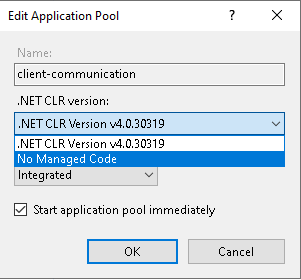

- Go to Application Pools in the Connections tab

- Double-click the application pool that was created for the site (by default it has the same name as the site)

- Set the .NET CLR version to No Managed Code
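Setting the .NET CLR version to No Managed Code is saved in applicationHost.config as an empty `managedRuntimeVersion` attribute. The attribute sits on the pool's `<add>` element under `<system.applicationHost><applicationPools>`. The sketch below checks that attribute. It assumes the default config location and administrator rights to read the file. `MySite` is a hypothetical pool name, and the function names are also hypothetical.

```python
# Sketch: check that an application pool uses "No Managed Code".
# Assumptions: default applicationHost.config location, administrator rights,
# and a pool name you supply (e.g. the hypothetical "MySite").
from typing import Optional
import xml.etree.ElementTree as ET

APPHOST_CONFIG = r"C:\Windows\System32\inetsrv\config\applicationHost.config"


def pool_runtime_version(root: ET.Element, pool_name: str) -> Optional[str]:
    """Return the pool's managedRuntimeVersion ("" means No Managed Code).

    Falls back to <applicationPoolDefaults> when the pool does not set the
    attribute; returns None if the pool or a value cannot be found.
    """
    pools = root.find("./system.applicationHost/applicationPools")
    if pools is None:
        return None
    defaults = pools.find("applicationPoolDefaults")
    for pool in pools.findall("add"):
        if pool.get("name") == pool_name:
            if "managedRuntimeVersion" in pool.attrib:
                return pool.attrib["managedRuntimeVersion"]
            return None if defaults is None else defaults.get("managedRuntimeVersion")
    return None


def uses_no_managed_code(pool_name: str, path: str = APPHOST_CONFIG) -> bool:
    root = ET.parse(path).getroot()
    return pool_runtime_version(root, pool_name) == ""


# Example: uses_no_managed_code("MySite")
```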

4. Publish the .NET Application to the site path

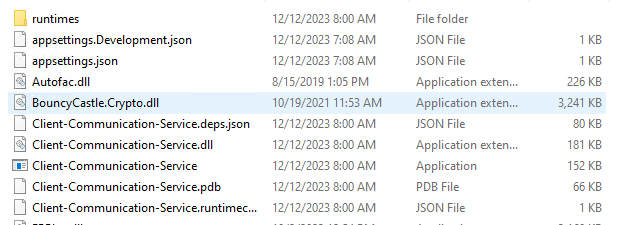

a. Open a terminal in your .NET project folder and publish the application to the site's physical path that you set earlier:

dotnet publish -c Release -o <path\to\site>

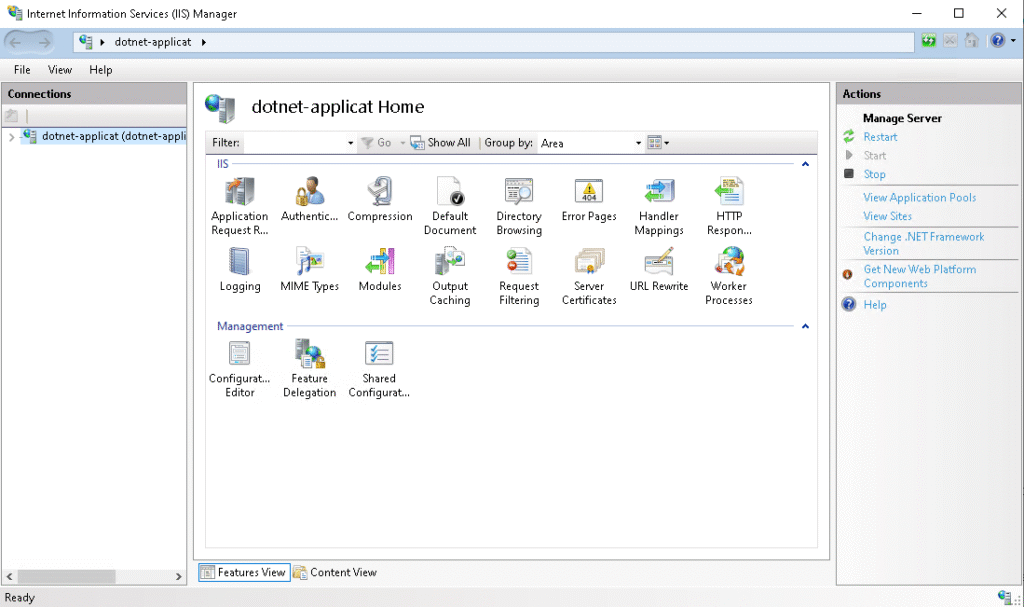

b. Check that the published files were created in the site path. The result should look something like this.
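For step b, a framework-dependent ASP.NET Core publish normally produces a web.config with an `<aspNetCore>` element. Its `processPath` is `dotnet` and its `arguments` point at the app's DLL. A self-contained publish instead points `processPath` at an `.exe`. The sketch below checks a publish folder for both cases. The function name and the DLL name in the comments are hypothetical, and passing these checks does not guarantee the app will start.

```python
# Sketch: sanity-check an ASP.NET Core publish folder before recycling the pool.
# Assumption: the folder was produced by `dotnet publish` for an IIS target.
from pathlib import Path, PureWindowsPath
from typing import List
import xml.etree.ElementTree as ET


def check_publish_folder(folder: str) -> List[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    site = Path(folder)
    web_config = site / "web.config"
    if not web_config.is_file():
        return [f"missing {web_config}"]
    # .// searches anywhere, so it works with or without a <location> wrapper.
    module = ET.parse(web_config).getroot().find(".//aspNetCore")
    if module is None:
        return ["web.config has no <aspNetCore> element"]
    process_path = module.get("processPath", "").strip()
    arguments = module.get("arguments", "").strip()
    # Framework-dependent: processPath="dotnet", arguments=".\MyApp.dll" (hypothetical name).
    # Self-contained: processPath=".\MyApp.exe".
    if process_path.lower() in ("dotnet", "dotnet.exe"):
        entry = arguments.split()[0] if arguments else ""
    else:
        entry = process_path
    if not entry:
        return ["<aspNetCore> does not name the app's entry point"]
    # web.config uses Windows-style relative paths; map them onto the folder.
    entry_file = site.joinpath(*PureWindowsPath(entry).parts)
    if not entry_file.is_file():
        return [f"entry point {entry_file} not found"]
    return []
```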

5. Recycle the Application Pool

- Select the site's application pool

- Click Recycle in the Actions tab

Create the SSL Certificate for IIS Sites

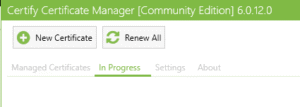

1. Download Certify The Web

Certify The Web is a tool that requests and installs SSL/TLS certificates for IIS-hosted websites.

https://certifytheweb.com/

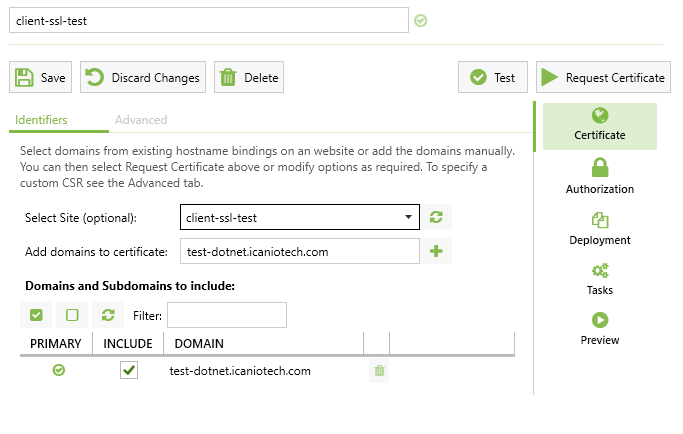

2. Create the Certificate

- Click New Certificate

- Select your IIS site name in the Select Site option

- Add your domain name in the Add Domains to Certificate section

3. Verify the Certificate

Open the site over HTTPS in a browser. A lock symbol in the address bar indicates that the SSL certificate was created successfully.
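The browser lock is the quickest check. To inspect the certificate from a script instead, for example to watch its expiry date, use the Python sketch below. It opens a TLS connection with certificate and hostname verification turned on. It then converts the certificate's `notAfter` field into days remaining. `example.com` stands in for your own domain, and the function names are hypothetical.

```python
# Sketch: fetch a site's certificate with verification on and report expiry.
# Assumption: replace the placeholder domain with your own; needs network access.
from typing import Optional
import socket
import ssl
import time


def fetch_certificate(host: str, port: int = 443, timeout: float = 5.0) -> dict:
    """Return the verified peer certificate; raises ssl.SSLError if it is invalid."""
    context = ssl.create_default_context()  # checks the chain and the hostname
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def days_until_expiry(not_after: str, now: Optional[float] = None) -> float:
    """Turn a notAfter string such as 'May  9 00:00:00 2007 GMT' into days left."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return (expires - current) / 86400


# Example: days_until_expiry(fetch_certificate("example.com")["notAfter"])
```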